Benchmarking Uncertainty Quantification for Deep Learning

By: Dristanta Silwal Email: silw8620@vandals.uidaho.edu

Home Town: Kathmandu, Bagmati High School: NIST

Major: Computer Science

Department: Computer Science

College: College of Engineering

Imagine you’re in a self-driving car, and a person suddenly appears in front of it. The car fails to brake—and that person gets hurt. Not because you made a mistake, but because the AI failed to detect that person. Worse, it was confident in its decision and didn’t even slow down.

Recent research shows that even advanced AI models can be overconfident in their predictions—even when they’re wrong.

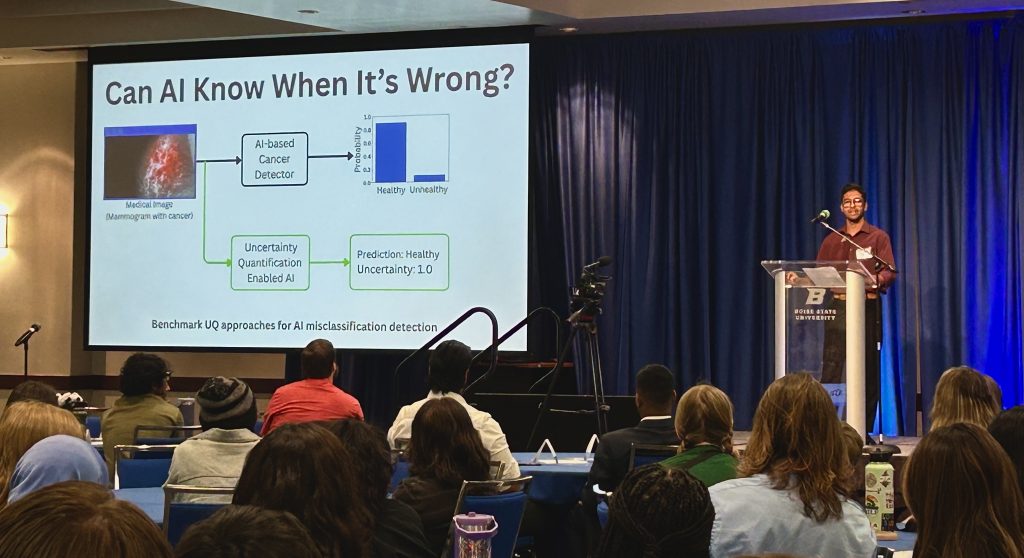

Now let’s look at another high-stakes example—this one in healthcare. What you’re seeing here is a mammogram for cancer. The AI-based detector looks at this image and confidently predicts “Healthy.” As the bar chart shows, it’s over 90% certain. But in reality, the patient has cancer—discovered months later by a real doctor. This is the danger of confident mistakes.

This is where my research comes in. I study something called Uncertainty Quantification, or UQ. In simple terms, UQ helps AI say, “I might be wrong—and here’s how unsure I am.” My research focuses on benchmarking UQ methods for misclassification detection—helping us recognize when AI is wrong and how confident it is in those wrong answers.

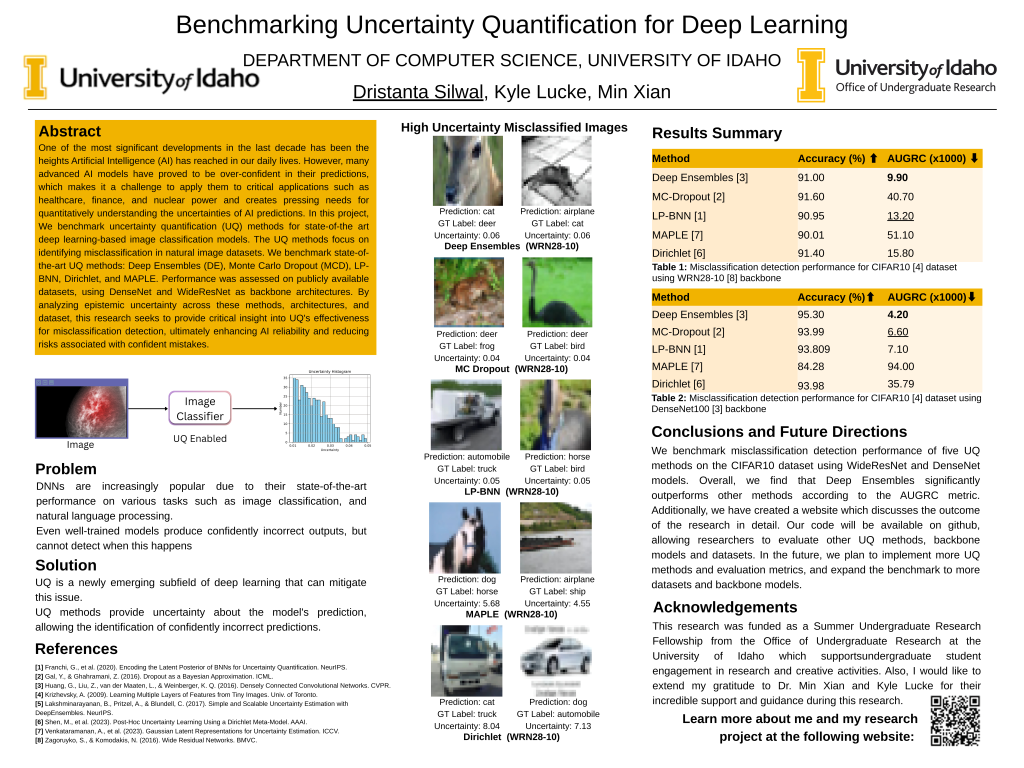

Methods

To explore this, we benchmarked five popular UQ methods on CIFAR-10, a widely used image classification dataset, using two backbone models: DenseNet and WideResNet. Let me give you a simple analogy for few of the method:

Deep Ensembles are like asking multiple doctors for their opinion. If they all agree—you feel confident. If they disagree—you might seek more tests.

MC Dropout is like asking the same doctor multiple times, each time under slightly different conditions—to see if their answer remains consistent.

Results

Our results showed that Deep Ensembles were the most effective at identifying confidently incorrect predictions. That result tells us: “I made a decision, and how sure I am with my prediction.” That kind of signal is crucial. It allows doctors to double-check results—potentially preventing misdiagnosis or worse.

Conclusion

This work has the potential to impact critical domains like healthcare, education, and transportation—where confident mistakes aren’t just wrong—they’re dangerous. If AI can say “I’m not sure,” it gives us—the humans—a chance to pause, assess, and step in before it’s too late. In the future, I’ll continue expanding this research to build a unified framework that enables practical Uncertainty Quantification for real-world AI applications.

Thank you.